CrossWeigh: Training Named Entity Tagger from Imperfect Annotations

Our paper “CrossWeigh: Training Named Entity Tagger from Imperfect Annotations” has been accepted to EMNLP 2019 as an oral presentation.

Highlights

- We correct the test set of CoNLL03 NER. This higher-quality evaluation set can be used in further research. The dataset is available here.

- We design a mistake-aware framework, CrossWeigh, that fits any NER model that supports weighted training.

Motivation

Label annotation mistakes made by human annotators pose two challenges to NER:

- mistakes in the test set can interfere with the evaluation results and even lead to an inaccurate assessment of model performance.

- mistakes in the training set can hurt NER model training.

We address these two problems by:

- manually correcting the mistakes in the test set to form a cleaner benchmark.

- developing a framework, CrossWeigh, for mistake-aware training.

CrossWeigh works with any NER algorithm that accepts weighted training instances. It is composed of two modules: 1) mistake estimation, where potential mistakes in the training data are identified through a cross-checking process, and 2) mistake re-weighing, where the weights of those suspected mistakes are lowered when training the final NER model.
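The two modules can be illustrated with a minimal Python sketch. Everything concrete here is an assumption made for illustration and is not taken from this post: the toy lookup tagger `train_toy_tagger` stands in for a real NER model, and the fold count `k`, the number of `rounds`, the decay factor `epsilon`, and the rule "weight = epsilon raised to the number of times a sentence was flagged" are hypothetical choices.

```python
import random
from collections import Counter, defaultdict

def train_toy_tagger(sentences):
    """Hypothetical stand-in for a real NER model:
    memorise the most frequent tag seen for each token."""
    counts = defaultdict(Counter)
    for tokens, tags in sentences:
        for tok, tag in zip(tokens, tags):
            counts[tok][tag] += 1
    lookup = {tok: c.most_common(1)[0][0] for tok, c in counts.items()}
    # Tokens never seen in training fall back to the "O" tag.
    return lambda tokens: [lookup.get(tok, "O") for tok in tokens]

def estimate_mistakes(data, k=3, rounds=2, seed=0):
    """Mistake estimation (assumed form): k-fold cross-checking.
    Returns, per sentence, how many times a model trained on the
    other folds disagreed with that sentence's annotation."""
    rng = random.Random(seed)
    flags = [0] * len(data)
    for _ in range(rounds):
        idx = list(range(len(data)))
        rng.shuffle(idx)
        folds = [idx[i::k] for i in range(k)]
        for held_out in folds:
            held = set(held_out)
            tagger = train_toy_tagger([data[i] for i in idx if i not in held])
            for i in held_out:
                tokens, tags = data[i]
                if tagger(tokens) != list(tags):
                    flags[i] += 1  # potential annotation mistake
    return flags

def reweigh(flags, epsilon=0.7):
    """Mistake re-weighing (assumed form): lower the training weight
    of a sentence each time it was flagged."""
    return [epsilon ** c for c in flags]

# Toy data: six consistent annotations and one suspicious one (last entry).
data = [(["Paris", "is", "nice"], ["B-LOC", "O", "O"])] * 6
data.append((["Paris", "is", "nice"], ["O", "O", "O"]))

flags = estimate_mistakes(data)
weights = reweigh(flags)
```

In this toy setup only the last sentence is ever flagged, so only its weight drops below 1. The resulting `weights` would then be handed to whatever weighted-training interface the chosen NER model exposes when training the final model.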

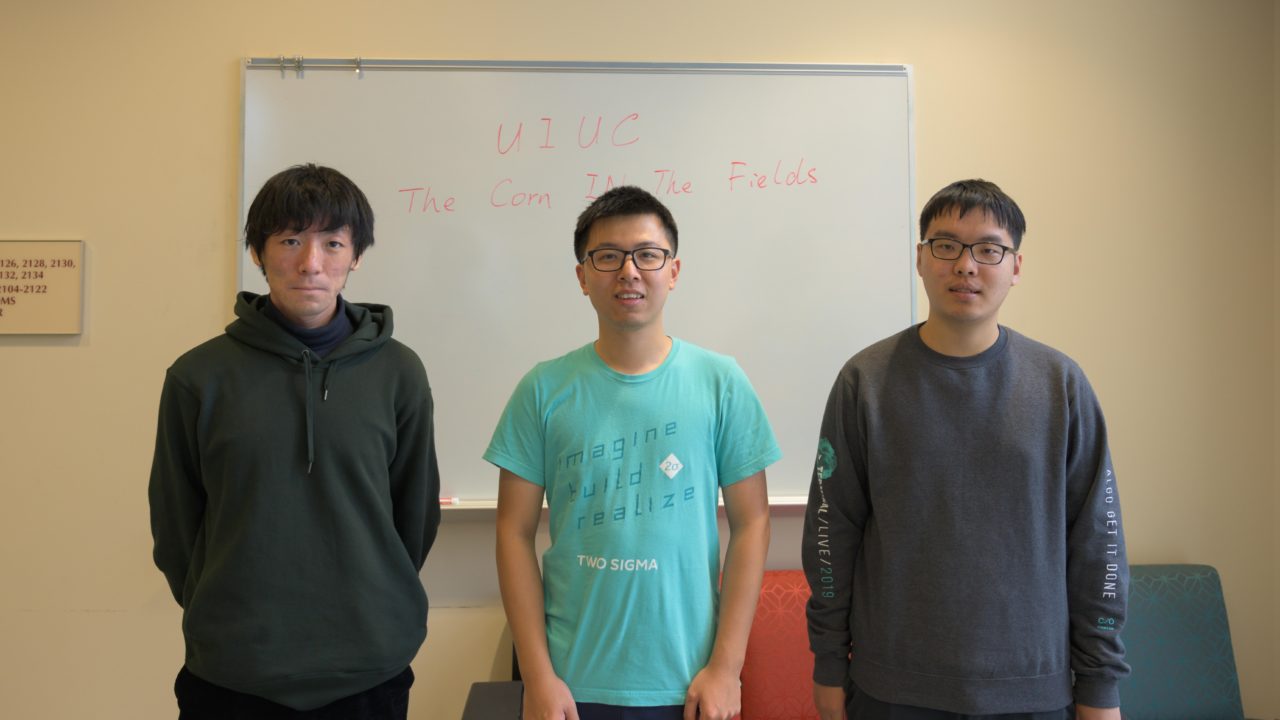

My team “TheCornInTheFields” (teammates: Jingbo Shang (middle), Wenda Qiu (left), and me (right)) won the IEEE Xtreme 13.0 competition. IEEE Xtreme is a well-known programming competition that attracts thousands of participants worldwide.
