Cross-Lingual Ability of Multilingual BERT: An Empirical Study

Our paper “Cross-Lingual Ability of Multilingual BERT: An Empirical Study” has been accepted to ICLR 2020 as a poster.

Highlights

We analyzed the linguistic properties, model architecture, and learning objectives that may contribute to the multilinguality of M-BERT.

Linguistic properties:

- Code-switching text (or what we call word-piece overlap) is not the main cause of multilinguality.

- Word ordering is crucial: when the words in each sentence are randomly permuted, multilinguality is low, though still significantly better than random.

- Unigram word frequency alone is not enough: when we resampled all words while keeping the same frequencies, performance was close to random. Taken together, the second and third findings suggest that the two languages share some similarity beyond word order that unigram frequency does not capture. We hypothesize that it may be similarity of n-gram occurrences.
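The two corpus perturbations described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the paper: the function names `permute_words` and `unigram_resample` are hypothetical, whitespace tokenization is assumed, and "resampling with the same frequency" is assumed here to mean drawing every word independently from the corpus unigram distribution.

```python
import random
from collections import Counter
from typing import List


def permute_words(sentence: str, rng: random.Random) -> str:
    """Shuffle word order inside one sentence.

    The bag of words is unchanged; only the ordering is destroyed.
    """
    words = sentence.split()  # assumption: whitespace tokenization
    rng.shuffle(words)
    return " ".join(words)


def unigram_resample(corpus: List[str], rng: random.Random) -> List[str]:
    """Rebuild each sentence by drawing words i.i.d. from the corpus unigram distribution.

    Sentence lengths are kept and word frequencies are preserved in expectation,
    but word order and co-occurrence (n-gram) information is lost.
    """
    counts = Counter(w for s in corpus for w in s.split())
    vocab = list(counts)
    weights = [counts[w] for w in vocab]
    return [
        " ".join(rng.choices(vocab, weights=weights, k=len(s.split())))
        for s in corpus
    ]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    corpus = ["the cat sat on the mat", "a dog chased the cat"]
    print([permute_words(s, rng) for s in corpus])
    print(unigram_resample(corpus, rng))
```

The contrast between the two functions mirrors the argument above: `permute_words` keeps each sentence's words but not their order, while `unigram_resample` keeps only global word frequencies.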

Architecture:

- The depth of the Transformer is the most important factor.

- The number of attention heads affects absolute performance on individual languages, but the gap between in-language supervision and cross-language zero-shot learning did not change much.

- The total number of parameters, like depth, affects multilinguality.

Learning Objectives:

- Removing the Next Sentence Prediction objective leads to a slight increase in performance.

- Even marking sentences with language IDs, which lets BERT know exactly which language it is learning on, did not hurt performance.

- Using word-pieces leads to strong improvements on both the source and target languages (likely task-dependent) and a slight cross-lingual improvement compared to word- or character-based models.
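To make the tokenization comparison in the last point concrete, here is a toy sketch of the three granularities. The word-piece part follows the greedy longest-match-first scheme with `##` continuation pieces used by BERT's WordPiece tokenizer; the vocabulary, the function names `wordpiece_tokenize` and `tokenize`, and whitespace pre-tokenization are illustrative assumptions, not the setup from the paper.

```python
from typing import AbstractSet, List


def wordpiece_tokenize(word: str, vocab: AbstractSet[str], unk: str = "[UNK]") -> List[str]:
    """Greedy longest-match-first WordPiece split of a single word.

    Non-initial pieces carry the '##' continuation prefix, as in BERT.
    """
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until a vocab hit.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matches: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


def tokenize(sentence: str, mode: str, vocab: AbstractSet[str] = frozenset()) -> List[str]:
    """Split a sentence into word, character, or word-piece tokens."""
    words = sentence.split()  # assumption: whitespace pre-tokenization
    if mode == "word":
        return words
    if mode == "char":
        return [c for w in words for c in w]
    if mode == "wordpiece":
        return [p for w in words for p in wordpiece_tokenize(w, vocab)]
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    toy_vocab = {"the", "kids", "play", "##ing", "##ed"}  # hypothetical toy vocabulary
    for mode in ("word", "char", "wordpiece"):
        print(mode, tokenize("the kids playing", mode, toy_vocab))
```

With the toy vocabulary, `tokenize("the kids playing", "wordpiece", toy_vocab)` returns `['the', 'kids', 'play', '##ing']`. Word-pieces sit between the two extremes: frequent words stay whole, while rarer words are split into subword units.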

Motivation

Multilingual BERT (M-BERT) has shown surprising cross-lingual abilities, even when it is trained without any cross-lingual objective. In this work, we analyze what causes this multilinguality in terms of three factors: the linguistic properties of the languages, the architecture of the model, and the learning objectives.

Please refer to our paper and GitHub repository (tentative, to be moved to CCG) for more details.

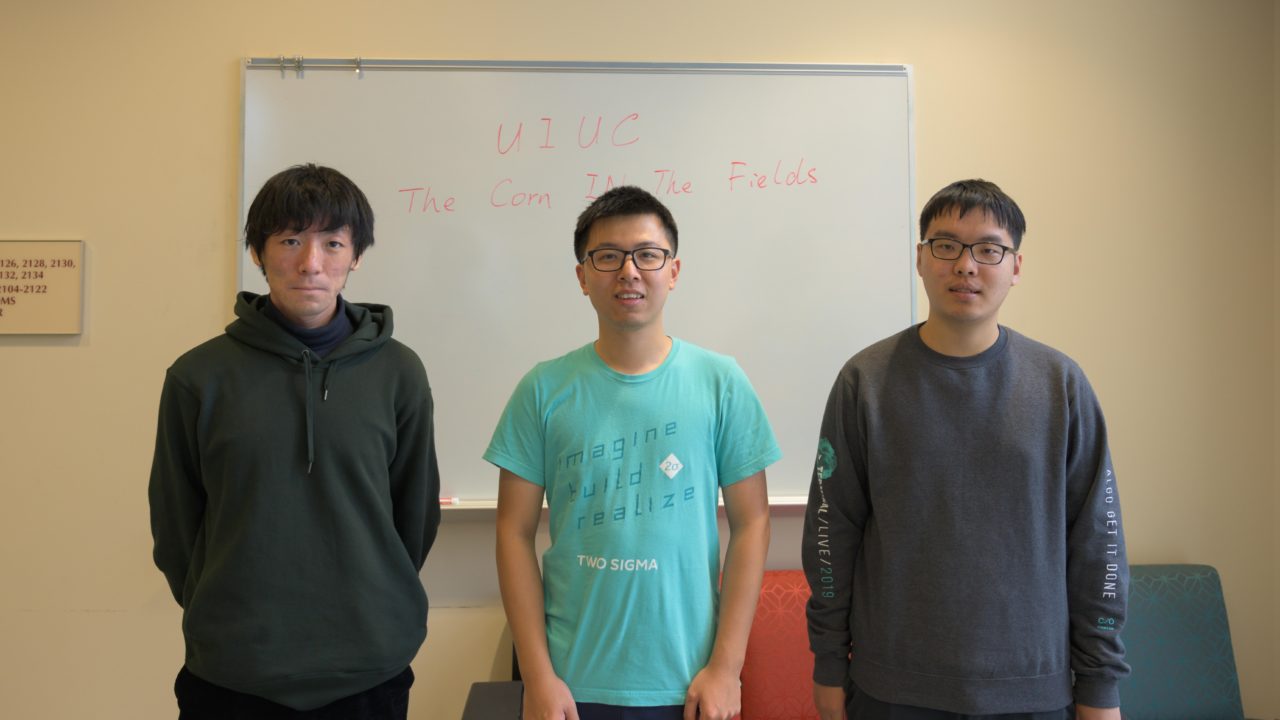

My team “TheCornInTheFields” (teammates: Jingbo Shang (middle), Wenda Qiu (left), and me (right)) won the IEEE Xtreme 13.0 competition. IEEE Xtreme is a well-known programming competition that attracts thousands of participants worldwide.